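Computer vision benefits greatly from GPU architectures. CUDA can make per-pixel loops and local (neighborhood) operations in image processing much more efficient, because each pixel can be handled by its own thread. Here is a simple example of accessing an OpenCV Mat from a CUDA kernel. For larger image resolutions the CUDA version tends to run faster than the CPU, since the fixed cost of copying data to and from the GPU is spread over more pixels.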

- First, we need to configure Visual Studio to recognize CUDA kernel files (`*.cu`). See the previous tutorial for adding the CUDA include and lib directories and linking `cudart.lib`.

- Once everything is set, right-click the project > Build Customization and check CUDA 6.5.

- Now add a new file to your project: create a `<name>.cpp` file, then rename it to `<name>.cu`.

- Next, set the file type of the .cu file: open the .cu file's Properties > File Type and select CUDA C++.

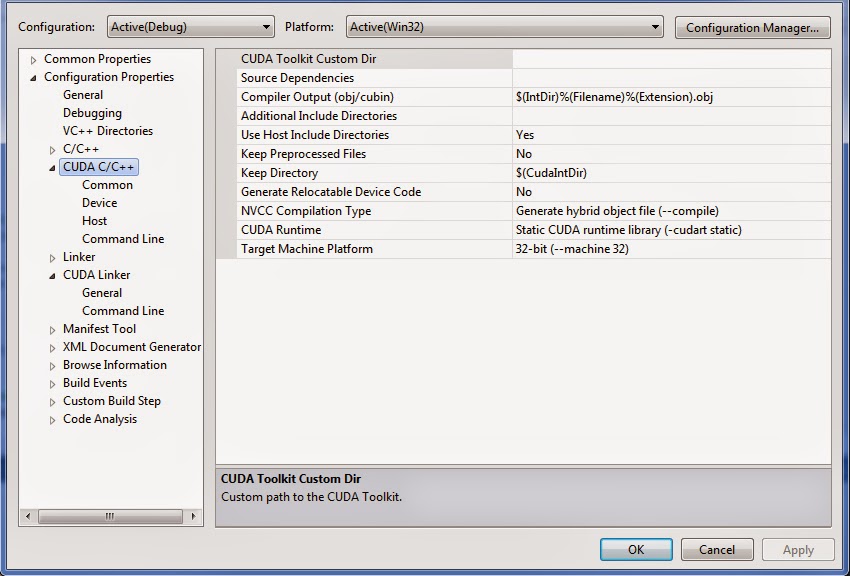

- After you set this, the CUDA settings will show up in the project properties.

- With everything set up, create a header for the .cu file, just as you would for a .cpp file.
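The `<<<...>>>` kernel launch syntax is compiled by nvcc, not by the regular C++ compiler that builds .cpp files. A common pattern is therefore to keep the kernel launch inside the .cu file and expose only a plain C++ function through its header. Ordinary .cpp files can then include the header without seeing any CUDA syntax. Below is a minimal sketch of such a header. The file name `ThresholdCUDA.h` and the function `ThresholdBGRGpu` are hypothetical and are not part of CUDA or OpenCV.

```cpp
// ThresholdCUDA.h (hypothetical file name)
#ifndef THRESHOLD_CUDA_H
#define THRESHOLD_CUDA_H

#include <cstddef>

// Host-side wrapper to be defined in the .cu file (hypothetical name and signature).
// Intended behavior: copy the BGR image to the GPU, launch the kernel,
// copy the mask back, and return false if any CUDA call fails.
bool ThresholdBGRGpu(const unsigned char* bgr, std::size_t bgrStep,
                     unsigned char* mask, std::size_t maskStep,
                     int width, int height, const int threshold[3]);

#endif // THRESHOLD_CUDA_H
```

A .cpp file would then call it with the Mat data, for example `ThresholdBGRGpu(image.ptr(), image.step, mask.ptr(), mask.step, image.cols, image.rows, thresh)`.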

We will use the following includes to work with CUDA and OpenCV:

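```cpp
#include <opencv/cv.h>                // OpenCV 2.x legacy umbrella header (Mat, cvtColor, ...)
#include <opencv/highgui.h>           // imshow, waitKey
#include <opencv2/gpu/gpu.hpp>        // OpenCV 2.x gpu module
#include <cuda_runtime.h>             // cudaMalloc, cudaMemcpy, cudaFree, ...
#include <device_launch_parameters.h> // threadIdx, blockIdx, blockDim declarations
```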

Before using CUDA, let's check whether a CUDA-capable device is available. If this function returns true, we can move on to the next step. If it returns false, we need a GPU with CUDA support!

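```cpp
// Check that the CUDA runtime works and at least one CUDA device is present
bool CheckCUDA()
{
    int deviceCount = 0;
    cudaError_t error = cudaGetDeviceCount(&deviceCount);

    // Compare against cudaSuccess and also require at least one device
    return (error == cudaSuccess) && (deviceCount > 0);
}
```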

Now we write the kernel inside the .cu file we created earlier.

```cpp
// CUDA kernel that thresholds a BGR image with the given per-channel values,
// e.g. B = 127, G = 127, R = 127.
// The result is a single-channel mask image:
// 255 if the pixel passes the threshold on all three channels, otherwise 0.
__global__ void Compute(
    const uchar* image,     // BGR image, 3 channels
    uchar* mask,            // mask image, 1 channel
    const int* threshold,   // thresholds for B, G, R
    int width, int height,  // image size in pixels
    int step3D, int step2D) // row step in bytes of the BGR image and of the mask
{
    // Calculate the x, y pixel coordinates handled by this thread
    const int xIndex = threadIdx.x + blockIdx.x * blockDim.x;
    const int yIndex = threadIdx.y + blockIdx.y * blockDim.y;

    // Skip threads that fall outside the image
    if ((xIndex < width) && (yIndex < height))
    {
        // Byte offsets into the color image and the mask
        const int colorIdx = yIndex * step3D + (3 * xIndex);
        const int grayIdx = yIndex * step2D + xIndex;

        // Read the value of each channel
        const int blue  = static_cast<int>(image[colorIdx + 0]);
        const int green = static_cast<int>(image[colorIdx + 1]);
        const int red   = static_cast<int>(image[colorIdx + 2]);

        // Thresholding: all three channels must exceed their threshold
        if (blue > threshold[0] && green > threshold[1] && red > threshold[2])
            mask[grayIdx] = static_cast<uchar>(255);
        else
            mask[grayIdx] = static_cast<uchar>(0);
    }
}
```
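To verify the kernel, it helps to have a CPU version of the same rule to compare against. The sketch below walks the image row by row using the same byte offsets as the kernel: `y * step3D + 3 * x` for the BGR image and `y * step2D + x` for the mask. Because of this, it also works when rows are padded and the step is larger than the row width in bytes. `ThresholdBGRReference` is a hypothetical helper name, not an OpenCV function.

```cpp
#include <cassert>
#include <cstddef>

// CPU reference for the Compute kernel (hypothetical helper).
// bgr:  interleaved B, G, R bytes; consecutive rows are step3D bytes apart
// mask: one byte per pixel; consecutive rows are step2D bytes apart
// A pixel becomes 255 only if all three channels are strictly greater than
// their thresholds, otherwise 0. Padding bytes at the end of rows are not touched.
void ThresholdBGRReference(const unsigned char* bgr, unsigned char* mask,
                           const int threshold[3], int width, int height,
                           std::size_t step3D, std::size_t step2D)
{
    // Each row must be large enough to hold its pixels
    assert(step3D >= 3 * static_cast<std::size_t>(width));
    assert(step2D >= static_cast<std::size_t>(width));

    for (int y = 0; y < height; ++y)
    {
        for (int x = 0; x < width; ++x)
        {
            // Same index arithmetic as in the kernel
            const std::size_t colorIdx = y * step3D + 3 * static_cast<std::size_t>(x);
            const std::size_t grayIdx = y * step2D + static_cast<std::size_t>(x);

            const int blue = bgr[colorIdx + 0];
            const int green = bgr[colorIdx + 1];
            const int red = bgr[colorIdx + 2];

            const bool pass = blue > threshold[0] && green > threshold[1] && red > threshold[2];
            mask[grayIdx] = pass ? static_cast<unsigned char>(255) : static_cast<unsigned char>(0);
        }
    }
}
```

With OpenCV Mats, the call would look like `ThresholdBGRReference(image.ptr(), cpuMask.ptr(), thresh, image.cols, image.rows, image.step, cpuMask.step)`, where `cpuMask` is a hypothetical `CV_8UC1` Mat of the same size. Checking `cv::norm(cpuMask, mask, cv::NORM_INF) == 0` then confirms that the CPU and GPU masks agree.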

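This is how we call the kernel from the main program. We assume an 8-bit, 3-channel BGR image (`CV_8UC3`) is already stored in `Mat image`. We also assume this code is compiled by nvcc (for example, placed in the .cu file), since the `<<<...>>>` launch syntax is CUDA-specific.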

```cpp
// Assumes `using namespace cv;`
Mat image; // input image: 8-bit, 3-channel BGR (CV_8UC3), already loaded
Mat mask;  // output mask after thresholding (CV_8UC1)

// Define the threshold for the B, G and R channels
int thresh[3] = { 127, 127, 127 };

int* dThresh;  // device pointer to threshold data
uchar* dImage; // device pointer to image data
uchar* dMask;  // device pointer to mask data

dim3 blockD; // BlockDim for CUDA: number of threads per block
dim3 gridD;  // GridDim for CUDA: number of blocks per grid

size_t intSize;   // size of the threshold array in bytes
size_t colorSize; // size of the 3-channel (BGR) image buffer in bytes
size_t graySize;  // size of the 1-channel mask buffer in bytes

// Allocate the mask (the kernel writes every pixel) and compute buffer sizes.
// rows * step assumes both Mats are continuous (e.g. not ROIs of a larger image).
mask.create(image.rows, image.cols, CV_8UC1);
intSize = 3 * sizeof(int);
colorSize = image.rows * image.step;
graySize = mask.rows * mask.step;

// Define block size and grid size; round the grid up so edge pixels are covered
blockD = dim3(32, 32);
gridD = dim3((image.cols + blockD.x - 1) / blockD.x,
             (image.rows + blockD.y - 1) / blockD.y);

// Allocate device memory for threshold, image and mask data
cudaMalloc((void**)&dThresh, intSize);
cudaMalloc((void**)&dImage, colorSize);
cudaMalloc((void**)&dMask, graySize);

// Copy threshold data and image data from CPU to GPU
cudaMemcpy(dThresh, thresh, intSize, cudaMemcpyHostToDevice);
cudaMemcpy(dImage, image.ptr(), colorSize, cudaMemcpyHostToDevice);

// Compute the threshold with the kernel
Compute<<<gridD, blockD>>>(
    dImage, dMask, dThresh,
    image.cols, image.rows,
    static_cast<int>(image.step), static_cast<int>(mask.step));

// Download the resulting mask from GPU to CPU
// (cudaMemcpy waits for the kernel to finish first)
cudaMemcpy(mask.ptr(), dMask, graySize, cudaMemcpyDeviceToHost);

// Release device memory
cudaFree(dThresh);
cudaFree(dImage);
cudaFree(dMask);

// Show the mask
imshow("ThresholdBox", mask);
waitKey(0);
```
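When sizing the grid, plain integer division such as `image.cols / blockD.x` rounds down. Take a 1920x1080 image with 32x32 blocks: 1080 / 32 gives 33 blocks, which cover only 1056 rows, so the bottom 24 rows of the mask would never be written. A small helper that rounds up instead is sketched below. `DivUp` is a hypothetical name, not a CUDA or OpenCV function.

```cpp
#include <cassert>

// Number of blocks needed so that blocks * blockSize >= total.
// DivUp is a hypothetical helper name, not part of CUDA or OpenCV.
unsigned int DivUp(unsigned int total, unsigned int blockSize)
{
    assert(blockSize > 0);
    return (total + blockSize - 1) / blockSize; // integer ceiling division
}
```

In the host code this would read `gridD = dim3(DivUp(image.cols, blockD.x), DivUp(image.rows, blockD.y));`. The kernel's `xIndex < width && yIndex < height` check makes the extra threads in the last, partially filled block harmless.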

That is it!